Or why Dragonfly is winning the hearts of developers…


I talked in my previous post about Redis eviction policies. In this post, I would like to describe the design behind Dragonfly cache.

Let’s talk about the simplicity of Redis. Redis was initially designed as a simple store, and it seems that its APIs achieved this goal. Unfortunately, Redis’s simple design makes it unreliable and difficult to manage in production.

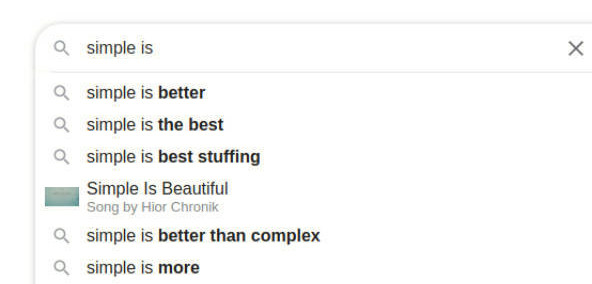

So the question is - what does simplicity mean to you as a datastore user?

Following my previous post, we are going to start with the “hottest potato”: the single-threaded vs. multi-threaded argument.

During the last 13 years, Redis has become a truly ubiquitous memory store that has won the hearts of numerous dev-ops and software engineers. Indeed, according to the 2021 Stack Overflow survey, Redis is the most loved database for the 5th time in a row, and it sits at the top of the db-engines ranking, well ahead of the next contender. But how well does Redis utilize modern hardware systems? Will it stay competitive in a few years without reinventing itself?

In part 1 of my tutorial I explained what happens behind the scenes of a typical mapreduce framework. In this section we will go over GAIA-MR and show why it’s more efficient than other open-source frameworks.

I’ve recently had a chance to benchmark GAIA in Google Cloud. The goal was to test how quickly I can process compressed text data (i.e. read and uncompress it on the fly) when running on a single VM and reading directly from cloud storage. The results were quite surprising.

There are many Java-based mapreduce frameworks today: Apache Beam, Flink, and Apex, to name a few.

GAIA-MR is my attempt to show the advantages of C++ over other languages in this domain. It’s currently implemented for a single machine, but even with this restriction I’ve seen a 3-7x reduction in cost and running time compared to current alternatives.

Please note that the single-machine restriction puts a hard limit on how much data we can process; nevertheless, GAIA-MR shines with small-to-medium size workloads (~1TB). This part gives an introduction to mapreduce in general.

A small introduction to the fiber-based programming model, based on the Boost.Fiber library

Lately, there have been many discussions in the programming community in general, and in the C++ community in particular, on how to write efficient asynchronous code. Many concepts like futures, continuations, and coroutines are being discussed by the C++ standard committee, but not much progress has been made beyond the very minimal support for futures in C++11.

On the other hand, many mainstream programming languages progressed more quickly and either adopted asynchronous models into the core language or popularized them via standard libraries. For example, Python (yield) and Lua use coroutines to achieve asynchronicity, Java uses continuations and futures, Go and Erlang use green threads, and C and JavaScript use callback-based actor models. C++, however, has historically lacked official support for asynchronous programming, which forced the community to introduce ad-hoc frameworks and libraries to provide this functionality.
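To make the coroutine style mentioned above a bit more concrete, here is a minimal Python sketch of a `yield`-based coroutine. It only illustrates the suspend-and-resume mechanism, not a full asynchronous I/O framework, and the names `running_average` and `feed` are made up for this example.

```python
def running_average():
    """Generator-based coroutine: suspends at each yield, resumes on send()."""
    total = 0.0
    count = 0
    average = None
    while True:
        # Execution pauses here until the caller sends the next value.
        value = yield average
        total += value
        count += 1
        average = total / count


def feed(values):
    """Drive the coroutine with a list of values and collect its replies."""
    co = running_average()
    next(co)  # prime the coroutine: run it up to the first yield
    return [co.send(v) for v in values]


if __name__ == "__main__":
    print(feed([10, 20, 30]))
```

The caller and the coroutine take turns on a single thread, and the coroutine keeps its local state (`total`, `count`) between resumptions. Fibers bring that same property to C++.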

I would like to share my opinion on what I think will be the best direction for asynchronous models in C++ by reviewing two prominent frameworks: Seastar and Boost.Fiber. This (opinionated) post reviews Seastar.